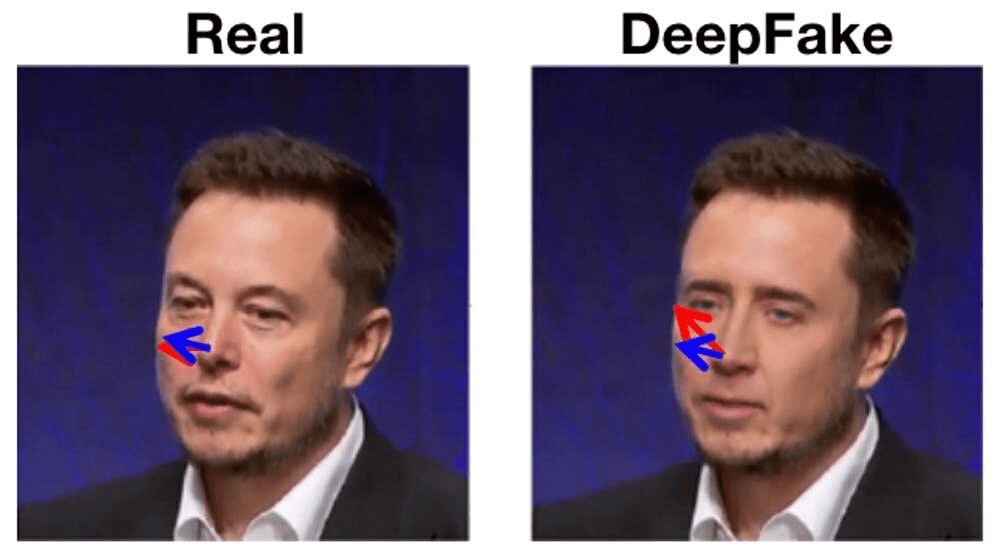

Contrary to what most people think, deepfakes are not that hard to spot. Face-swapped images and videos can look vivid and real, but researchers at the University of Southern California and the University of California, Berkeley are leading the way with new detection technology. Their approach uses machine learning to examine soft biometrics, including but not limited to facial quirks and the way a person speaks. They report 92 to 96 percent accuracy in detecting deepfakes.

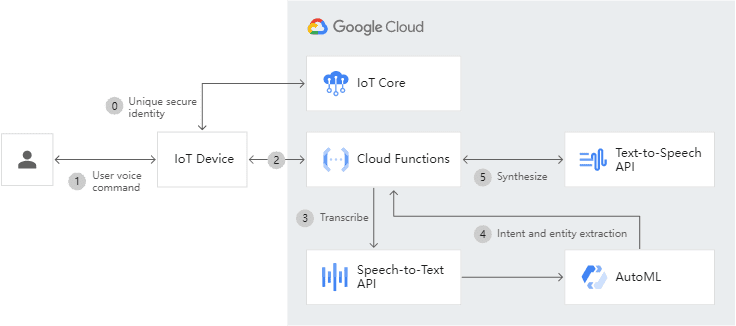

In addition to efforts from leading US universities, tech giants such as Google are joining the effort to prevent the malicious use of deepfakes. Google is working on text-to-speech conversion tools that can be used to verify speakers. In this way, Alphabet, Google’s parent company, demonstrates its corporate social responsibility by giving back to society.

Moreover, other high-tech firms are coming up with innovative solutions to make AI more transparent and to protect people from the “dark side” of deepswaps. Some of us are afraid of faceswaps, primarily because of the potential consequences of malicious deepfakes spreading unchecked across the internet and social media. On this front, Twitter and Facebook (Meta) have our backs: both have banned malicious deepswaps. This should be reassuring in today’s highly connected online world, especially for people who worry about their private information being leaked.

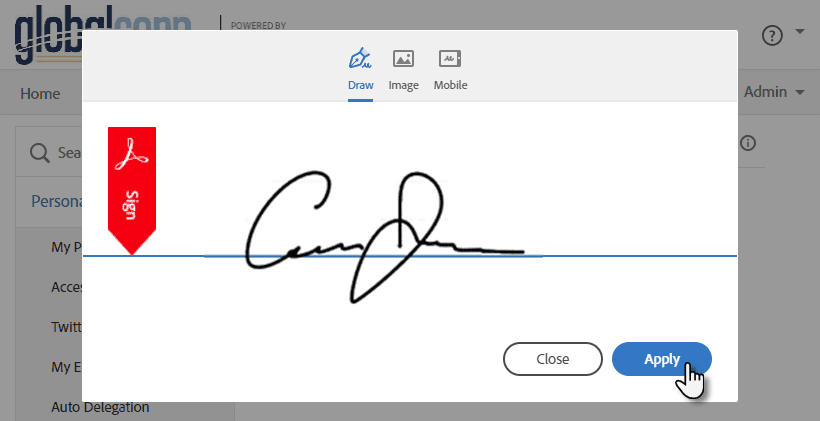

When we think about technology companies, we think about FAANG and a handful of other industry leaders competing fiercely against each other. Yet another big player is joining the cooperative effort to foster AI transparency: Adobe. Adobe users can attach a signature to their content once they have finished a piece, and the signature has to give details about what the creation is. Adobe is also developing a tool that will help people tell a manipulated image from an unaltered one.
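To make the idea of a content signature more concrete, here is a minimal Python sketch. It is not Adobe’s tool or format; the function names `sign_content` and `verify_content`, the manifest fields, and the shared secret key are all assumptions made up for illustration. It simply shows how a signed description attached to a file lets anyone with the key notice when the file has been changed afterwards.

```python
import hashlib
import hmac
import json


def sign_content(content: bytes, creator: str, description: str, key: bytes) -> dict:
    """Build a small manifest describing the content and sign it (hypothetical format)."""
    manifest = {
        "creator": creator,
        "description": description,
        # Fingerprint of the exact bytes the creator published.
        "sha256": hashlib.sha256(content).hexdigest(),
    }
    payload = json.dumps(manifest, sort_keys=True).encode("utf-8")
    # HMAC stands in for a real digital signature to keep the sketch stdlib-only.
    manifest["signature"] = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return manifest


def verify_content(content: bytes, manifest: dict, key: bytes) -> bool:
    """Return True only if the manifest is untampered and matches the content."""
    claimed = {k: v for k, v in manifest.items() if k != "signature"}
    payload = json.dumps(claimed, sort_keys=True).encode("utf-8")
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    signature_ok = hmac.compare_digest(expected, manifest.get("signature", ""))
    content_ok = claimed.get("sha256") == hashlib.sha256(content).hexdigest()
    return signature_ok and content_ok


if __name__ == "__main__":
    key = b"demo-secret-key"  # assumption: a shared secret, for illustration only
    original = b"pixels of the original image"
    manifest = sign_content(original, "Alice", "Unedited portrait photo", key)
    print(verify_content(original, manifest, key))
    print(verify_content(b"pixels of a face-swapped image", manifest, key))
```

A production system would more likely use public-key signatures, so that anyone can verify a piece of content without being able to forge a signature. The shared key here only keeps the example short.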

High-tech companies are not the only ones paying attention to the potential harms of faceswaps. NGOs (non-governmental organizations) are also advancing the initiative to protect people’s privacy online. Large groups such as the DFDC (Deepfake Detection Challenge) are actively advocating for cooperative innovation in an effort to develop more advanced deepswap detection. The DFDC demonstrated its determination by sharing a dataset of 124,000 videos featuring eight facial-modification algorithms.

Additionally, an Amsterdam-based startup called Deeptrace is on track to deliver an automated tool capable of identifying face swaps. The tool will work much like antivirus software, scanning audiovisual media in the background. This deepswap “antivirus” will be an important milestone for society as a whole, which will most likely move into the metaverse within the next 10 to 20 years.

The last organization to join this group effort is the U.S. Defense Advanced Research Projects Agency (DARPA). DARPA is funding research into the automatic detection of deepswaps through a program called Media Forensics, abbreviated as MediFor.

If you are not familiar with the face swap industry and had little idea how hard deepfakes are to spot, you can now rest assured. You have not only the 92 to 96 percent detection accuracy, drawn from a statistically significant sample, but also the support of sources ranging from prestigious academic institutions and popular high-tech giants to socially responsible NGOs.

Let me move on to the next topic and explain why faceswap is both legal and safe. First, deepswap is a perfectly legal industry, and most people use deepfake technology for fun and social interaction. Of course, some geeks may use deepswap to explore the VR/AR world, depending on how you define the metaverse. Only bad actors in the shadows attempt illegal uses of deepfakes, such as copyright misappropriation or violations of personal privacy. Yet the misbehavior of a small minority cannot overshadow the benefits face swap brings to society.

Also, it’s always the people using the technology, rather than deepfake itself, who cause damage in the AI environment. A parallel would be Tinder, which some bad guys use to harm others. Yet Tinder as an app is neutral. Getting rid of the app entirely would not help; the real solution is to put security measures in place and line up high-tech weapons against potential misbehavior.

The same logic applies here. If you are still afraid of face swap technology, think about the risks of going out at night with friends. You can always run into bad guys at night, but would you say no to a friend’s birthday party invitation? Would you turn down a friend’s farewell party? Deepswap is unique in that it’s more than a tool that brings joy to your life by letting you poke fun at friends. It’s an AI tool that sets the stage for the metaverse by allowing us to take on multinational identities, wearing any face we want on our bodies.

If you don’t want to fall behind the metaverse trend, you should consider getting involved, because in the AR/VR world we will have our lives, jobs, properties, currencies, friends, loved ones, children, and more. But before we jump to this exciting future, we must have faces: virtual faces with a unique, one-to-one connection to our real faces in this world, which only today’s deepfake technology can make possible.