You may have come across videos online in which a politician makes a statement that completely contradicts their political views, or a celebrity says something they would never normally say.

Chances are, it was not actually them. With today's rapid advances in technology, people can use AI to convincingly fake a person's appearance, producing what are known as deepfakes.

One of the key technologies behind this is face swapping. Think of it as an effortless, seamless Photoshop technique that can replace the faces in photos and videos.

In this article, I will explain in simple terms how this technology works and what it is used for.

Beyond careful inspection with the naked eye, how can we objectively assess the quality of a face swap?

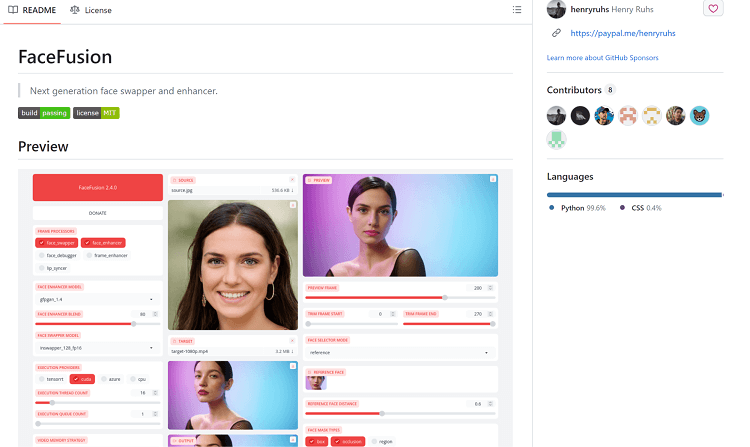

And which face-swapping models and products currently perform best?

What is face swap?

Face swapping is a technique that uses artificial intelligence (AI) to superimpose a person's face onto a photo or video while preserving the expressions, eye and mouth movements, head pose, and lighting of the face being replaced, as well as the background.

The goal is to leave the rest of the body and the surrounding scene untouched.

Face swapping also works on videos: the swap is applied frame by frame, so the identity comes from the source while the facial expressions and eye and mouth movements of the target video are preserved.

What can face swap do?

Face swapping has many applications:

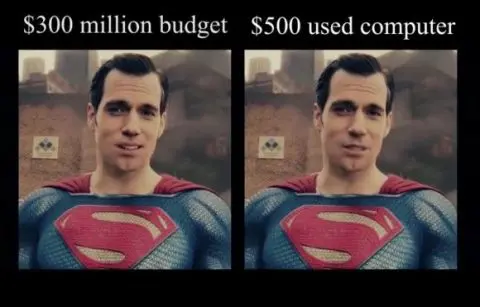

Movies and Television: Face swapping is used to create special effects, such as de-aging actors, bringing deceased actors back to screen, or enabling a single actor to play multiple roles.

Memes and Parodies: Face swapping is popular for creating humorous content on the internet.

Social Media: Apps use face swapping for fun filters and features that let users swap faces with friends or celebrities.

Virtual Conferences: Participants can swap faces to maintain privacy while still showing their facial expressions.

Video Games: It can be used to personalize characters by allowing players to insert their own faces into the game.

Psychological Therapy: It can be used in therapy sessions to help patients overcome certain phobias or conditions by replaying different scenarios to them.

How does face swap work?

Three items are involved in face swapping:

(1) Target photo/video, which is the material where you want to replace the face while keeping all other elements consistent.

(2) Source photo, which is the source of the face you want to swap.

(3) Result photo/video, which is the final product after the face has been swapped.

Face swapping is a complex process that involves several key steps:

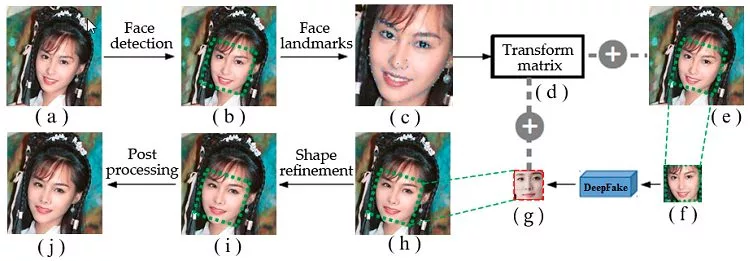

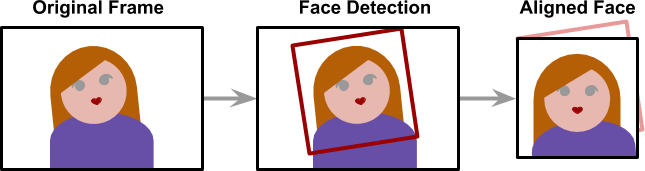

Step 1: Face Detection

The first step is to detect and locate faces in the source and target images or videos. This is typically done using computer vision algorithms that identify facial features.

Several algorithms can be used for face detection; today the most common are convolutional neural networks (CNNs):

Deep Learning (Convolutional Neural Networks, CNNs)

Training: CNNs are trained on large datasets of images containing faces. The network learns to recognize the complex patterns and structures of faces.

Inference: The trained CNN is used to predict the presence and location of faces in new images.

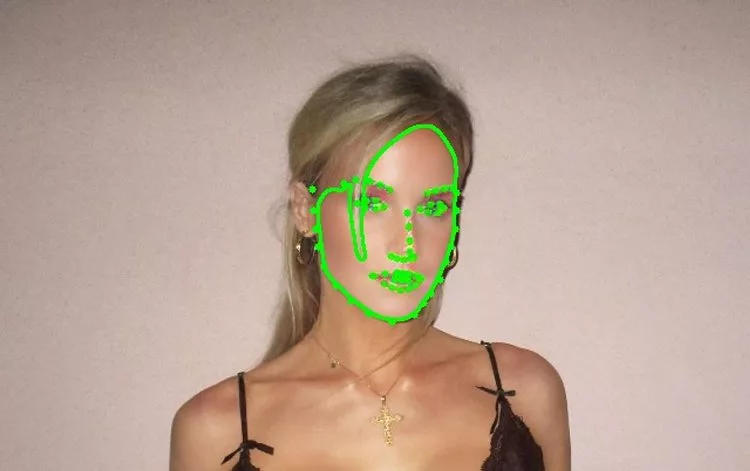
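A CNN detector usually outputs many overlapping candidate boxes, each with a confidence score, for the same face. A standard post-processing step, non-maximum suppression (NMS), keeps only the best box per face. The sketch below is a minimal pure-Python greedy NMS, assuming boxes in `(x1, y1, x2, y2)` pixel format and a hypothetical IoU threshold of 0.5; it is not taken from any particular detector.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), with x2 > x1 and y2 > y1


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes: List[Box], scores: List[float],
                        iou_threshold: float = 0.5) -> List[int]:
    """Return indices of the boxes kept by greedy NMS, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # Keep a box only if it does not overlap an already-kept box too much.
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


boxes = [(10, 10, 110, 110), (12, 12, 112, 112), (200, 50, 260, 120)]
scores = [0.95, 0.80, 0.90]
kept = non_max_suppression(boxes, scores)
```

Here the second box is a near-duplicate of the first (IoU of roughly 0.92), so it is suppressed, while the third box, which does not overlap either, survives as a separate face.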

Step 2: Facial Landmark Detection

Once faces are detected, the next step is to identify specific facial landmarks or key points.

CNNs or other deep learning models locate points that correspond to important facial features such as the eyes, nose, mouth, and jawline.

Typical landmark detection models identify between 68 and 194 key points on a face.
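In the widely used 68-point scheme (the iBUG 300-W layout, which dlib's 68-point shape predictor also follows), landmark indices are grouped by facial region. The sketch below assumes the landmarks arrive as a list of 68 `(x, y)` tuples in that order and computes each eye's center, a typical input to the alignment step. The helper names are illustrative, not from any library.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float]

# Index ranges (start inclusive, end exclusive) in the 68-point iBUG 300-W layout.
# "Right" and "left" are from the subject's point of view.
REGIONS_68: Dict[str, Tuple[int, int]] = {
    "jaw": (0, 17),
    "right_eyebrow": (17, 22),
    "left_eyebrow": (22, 27),
    "nose": (27, 36),
    "right_eye": (36, 42),
    "left_eye": (42, 48),
    "mouth": (48, 68),
}


def region(landmarks: List[Point], name: str) -> List[Point]:
    """Slice out the landmarks that belong to one facial region."""
    if len(landmarks) != 68:
        raise ValueError("expected 68 landmarks")
    start, end = REGIONS_68[name]
    return landmarks[start:end]


def centroid(points: List[Point]) -> Point:
    """Mean (x, y) of a group of points."""
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)


def eye_centers(landmarks: List[Point]) -> Tuple[Point, Point]:
    """Return (right_eye_center, left_eye_center) computed from 68 landmarks."""
    return (centroid(region(landmarks, "right_eye")),
            centroid(region(landmarks, "left_eye")))
```

Denser schemes such as 194 points follow the same idea with more points per region; only the index table changes.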

Step 3: Face Alignment

The detected faces are aligned using the identified landmarks so that facial features line up correctly between the source and the target.

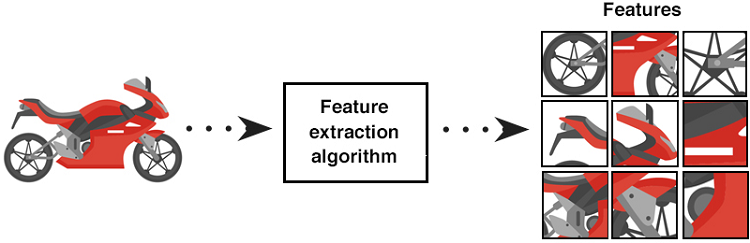
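A common way to align a face is a similarity transform (rotation, uniform scale, and translation) that moves detected landmarks onto fixed template positions. The minimal sketch below uses only the two eye centers and treats 2-D points as complex numbers, so the transform is simply `z -> a*z + b`. The template eye positions are hypothetical values for a 112x112 crop; production pipelines typically fit the transform to five or more landmarks by least squares instead.

```python
from typing import List, Tuple

Point = Tuple[float, float]

# Hypothetical template: where the eyes should land in a 112x112 aligned crop.
TEMPLATE_RIGHT_EYE: Point = (38.0, 52.0)
TEMPLATE_LEFT_EYE: Point = (74.0, 52.0)


def fit_similarity(src: Tuple[Point, Point],
                   dst: Tuple[Point, Point]) -> Tuple[complex, complex]:
    """Return (a, b) such that z -> a*z + b maps both src points exactly onto dst.

    With points as complex numbers, a encodes rotation and scale, b translation.
    """
    z1, z2 = complex(*src[0]), complex(*src[1])
    w1, w2 = complex(*dst[0]), complex(*dst[1])
    if z1 == z2:
        raise ValueError("source points must differ")
    a = (w2 - w1) / (z2 - z1)
    b = w1 - a * z1
    return a, b


def apply_similarity(a: complex, b: complex, p: Point) -> Point:
    """Apply the fitted transform to one (x, y) point."""
    w = a * complex(*p) + b
    return (w.real, w.imag)


def to_affine_matrix(a: complex, b: complex) -> List[List[float]]:
    """Equivalent 2x3 affine matrix [[ar, -ai, br], [ai, ar, bi]] for an image warp."""
    return [[a.real, -a.imag, b.real], [a.imag, a.real, b.imag]]


# Example: a tilted face whose eyes were detected at these pixel positions.
a, b = fit_similarity(((100.0, 120.0), (160.0, 180.0)),
                      (TEMPLATE_RIGHT_EYE, TEMPLATE_LEFT_EYE))
```

The 2x3 matrix has the layout expected by image-warping routines such as OpenCV's `cv2.warpAffine`, which would then resample the whole face crop.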

Step 4: Feature Extraction

Deep neural networks, often pre-trained on face recognition tasks, extract identity-related features from the source face.

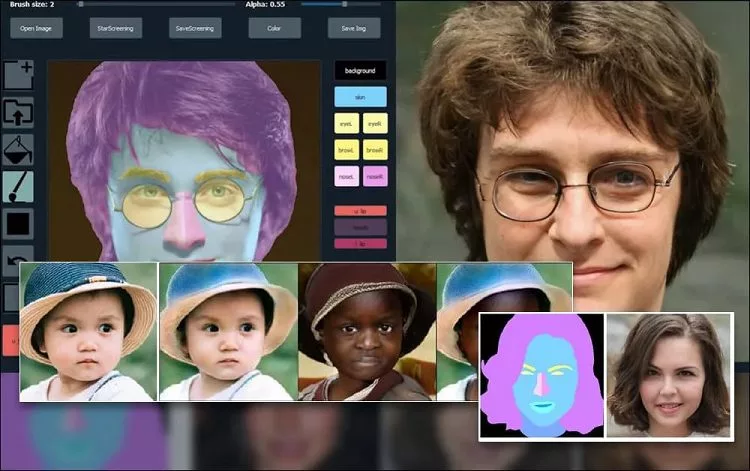

Step 5: Attribute Encoding

A separate network encodes attributes of the target face, such as pose, expression, and lighting conditions.

Step 6: Face Generation

A generator network, typically based on generative adversarial networks (GANs) or autoencoders, combines the identity features from the source face with the attributes of the target face to generate the swapped face.
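One published way to combine the two inputs, used for example in SimSwap's identity injection module, is adaptive instance normalization (AdaIN): each channel of the target's attribute feature map is normalized, then rescaled and shifted with parameters derived from the source identity vector. The toy sketch below works on a single channel stored as a plain list and takes `gamma` and `beta` as given numbers; in a real generator they would be produced by learned layers from the identity embedding, and the function name is hypothetical.

```python
import math
from typing import List


def adain_channel(features: List[float], gamma: float, beta: float,
                  eps: float = 1e-5) -> List[float]:
    """Adaptive instance normalization for one feature channel.

    features: flattened activations of one channel of the target's feature map.
    gamma, beta: scale and shift that, in a real model, are predicted from the
    source identity embedding (here they are plain inputs).
    """
    n = len(features)
    mean = sum(features) / n
    var = sum((f - mean) ** 2 for f in features) / n
    std = math.sqrt(var + eps)
    # Remove the channel's own statistics, then impose the identity-driven ones.
    return [gamma * (f - mean) / std + beta for f in features]


out = adain_channel([1.0, 2.0, 3.0, 4.0], gamma=2.0, beta=5.0)
```

After the call, the channel's mean is `beta` (5.0) and its standard deviation is approximately `gamma` (2.0): the spatial pattern still comes from the target, while its statistics now come from the identity side.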

Step 7: Blending

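The generated face is blended back into the target image so that its edges, skin tone, and lighting match the surroundings. Some models, such as FaceShifter, also use adaptive attentional denormalization (AAD), which decides pixel by pixel how much to draw on identity features versus attribute features so that the two integrate seamlessly.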
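For reference, the AAD layer introduced in FaceShifter can be summarized as follows (notation adapted and simplified from the paper). Here $h$ is a layer's activation with per-channel mean $\mu$ and standard deviation $\sigma$, $(\gamma_{att}, \beta_{att})$ are modulation parameters computed from the target's attribute features, $(\gamma_{id}, \beta_{id})$ are computed from the source identity vector, $M$ is a learned per-pixel attention mask, and $\otimes$ denotes element-wise multiplication.

```latex
\begin{aligned}
\bar{h} &= \frac{h - \mu}{\sigma} \\
A &= \gamma_{att} \otimes \bar{h} + \beta_{att} \\
I &= \gamma_{id} \otimes \bar{h} + \beta_{id} \\
M &= \operatorname{sigmoid}\big(\operatorname{conv}(\bar{h})\big) \\
h_{out} &= (1 - M) \otimes A + M \otimes I
\end{aligned}
```

Where $M$ is close to 1 the output follows the source identity; where it is close to 0 it keeps the target's attributes, such as expression, pose, and background.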

Step 8: Refinement

Additional networks may be used to refine the result, such as preserving occlusions or improving overall realism.
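A simple way to preserve occlusions is to composite with a soft mask: the generated face is used only where the mask marks visible face, and the original target pixels (hands, glasses, hair) are kept everywhere else. The sketch below assumes grayscale images stored as nested lists of floats and a mask with values in [0, 1] that some segmentation model has already produced; that segmentation step is not shown, and the function name is hypothetical.

```python
from typing import List

Image = List[List[float]]  # grayscale image: rows of pixel values


def composite(generated: Image, target: Image, mask: Image) -> Image:
    """Per-pixel blend: mask * generated + (1 - mask) * target.

    mask = 1 where the swapped face should appear, 0 where the target must show
    through (occluders or background); fractional values give feathered edges.
    """
    if not (len(generated) == len(target) == len(mask)):
        raise ValueError("images must have the same height")
    out: Image = []
    for g_row, t_row, m_row in zip(generated, target, mask):
        if not (len(g_row) == len(t_row) == len(m_row)):
            raise ValueError("images must have the same width")
        out.append([m * g + (1.0 - m) * t for g, t, m in zip(g_row, t_row, m_row)])
    return out


blended = composite([[1.0, 1.0, 1.0]], [[0.0, 0.0, 0.0]], [[1.0, 0.5, 0.0]])
```

In this example the first pixel comes entirely from the generated face, the last entirely from the target (as it would under a hand or a pair of glasses), and the middle pixel is an even mix, which is how feathered mask edges hide the seam.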

Step 9: Video Processing

For face swapping in videos, additional steps involve tracking facial movements frame by frame and ensuring temporal coherence.
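Temporal coherence is often improved by smoothing per-frame estimates so that the swapped face does not jitter between frames. As one simple illustration (an assumption, not any specific product's method), the sketch below applies an exponential moving average to a landmark track. `alpha` is a hypothetical smoothing factor, and a frame with no detection (`None`) reuses the last smoothed value, so a brief detection failure does not make the swap vanish for that frame.

```python
from typing import List, Optional, Tuple

Point = Tuple[float, float]


def smooth_track(track: List[Optional[Point]],
                 alpha: float = 0.6) -> List[Optional[Point]]:
    """Exponential moving average over a per-frame point track.

    alpha in (0, 1] is the weight of the current frame: smaller values are
    smoother but lag more. Frames without a detection (None) repeat the
    previous smoothed point; before the first detection they stay None.
    """
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    smoothed: List[Optional[Point]] = []
    prev: Optional[Point] = None
    for p in track:
        if p is None:
            smoothed.append(prev)  # carry the last estimate forward
            continue
        if prev is None:
            prev = p  # first detection initializes the average
        else:
            prev = (alpha * p[0] + (1 - alpha) * prev[0],
                    alpha * p[1] + (1 - alpha) * prev[1])
        smoothed.append(prev)
    return smoothed


track = [None, (0.0, 0.0), (10.0, 0.0), None]
smoothed = smooth_track(track, alpha=0.5)
```

With `alpha=0.5`, the jump from x = 0 to x = 10 is softened to x = 5, and the final frame with a missing detection keeps that value.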

Step 10: Training

The entire system is typically trained end-to-end using large datasets of face images, often employing techniques like progressive training to achieve high-resolution results.
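Training objectives in published face-swap models typically combine several loss terms. As one example, the objective used by FaceShifter can be summarized roughly as below, with notation simplified and the weights $\lambda$ left as hyperparameters. $X_s$ is the source image, $X_t$ the target, $Y$ the generated result, $z_{id}(\cdot)$ a pre-trained face-recognition embedding, $z_{att}^{k}(\cdot)$ the $k$-th level attribute features, and $\mathcal{L}_{adv}$ the usual GAN adversarial loss.

```latex
\begin{aligned}
\mathcal{L} &= \mathcal{L}_{adv} + \lambda_{id}\,\mathcal{L}_{id} + \lambda_{att}\,\mathcal{L}_{att} + \lambda_{rec}\,\mathcal{L}_{rec} \\
\mathcal{L}_{id} &= 1 - \cos\big(z_{id}(Y),\, z_{id}(X_s)\big) \\
\mathcal{L}_{att} &= \tfrac{1}{2} \sum_{k} \big\lVert z_{att}^{k}(Y) - z_{att}^{k}(X_t) \big\rVert_2^2 \\
\mathcal{L}_{rec} &= \begin{cases} \tfrac{1}{2} \lVert Y - X_t \rVert_2^2 & \text{if } X_s = X_t \\ 0 & \text{otherwise} \end{cases}
\end{aligned}
```

The identity term is one minus the cosine similarity between face embeddings, the same kind of measure that is commonly used afterwards to evaluate how well a swap preserves the source identity.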

How to evaluate a face swap?

There are many criteria for evaluating a face swap, but one of the most important is how similar the face in the result is to the source face, that is, how well the source identity is preserved.

To compare two images, we first convert each one into a set of numbers (a feature vector) that represents its key features.

These numbers are like a special code that tells us what’s in the image.

We then compare these codes using cosine similarity, which measures how closely two arrows point in the same direction.

If the arrows point in almost the same direction (a cosine similarity close to 1), the images are very similar.

If they point in very different directions (a cosine similarity near 0, or negative when they point in opposite directions), the images are very different.

A cosine similarity of 0.9 therefore means the arrows point very close to each other: the two images share many of the same features and look very similar.
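The comparison described above takes only a few lines of code. The sketch assumes the two feature vectors have already been produced by a face-recognition model (for example an ArcFace-style embedding such as those provided by InsightFace, typically 512 numbers long); the three-number vectors below are made-up toy values, not real embeddings. The second helper returns 1 minus the similarity, the distance-style "loss" form in which similarity scores are sometimes reported.

```python
import math
from typing import Sequence


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    """cos(theta) = (u . v) / (|u| |v|): 1 = same direction, 0 = unrelated, -1 = opposite."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        raise ValueError("a zero vector has no direction")
    return dot / (norm_u * norm_v)


def identity_loss(u: Sequence[float], v: Sequence[float]) -> float:
    """Distance-style score: 1 - cosine similarity (0 means identical direction)."""
    return 1.0 - cosine_similarity(u, v)


source = [0.2, 0.9, 0.4]     # toy "source face" embedding
result = [0.25, 0.85, 0.45]  # toy "swapped result" embedding
print(round(cosine_similarity(source, result), 3))
```

Because only the direction matters, the result does not change if either vector is scaled, which is why face embeddings are often L2-normalized so that the similarity reduces to a plain dot product.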

DeepSwap has the highest similarity in the industry

Unlike other services and products that use open-source AI models, DeepSwap has developed its own AI model over many years.

It has been trained on more than 50,000 images from public datasets such as CelebA and LFW, using data augmentation techniques like rotation, cropping, and color transformation to enhance the diversity of the data.

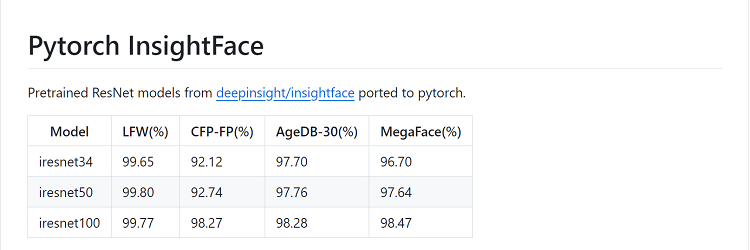

When I tested the similarity of DeepSwap's face swaps, I used InsightFace (PyTorch) to calculate the identity loss between the source face and the swapped result.

After extended testing, DeepSwap's average loss was only 0.06, which means the similarity of its face swaps reaches 1 − 0.06 = 0.94, or 94%.

Typically, if the similarity exceeds 80%, it becomes quite difficult for the human eye to recognize the differences.

If the number surpasses 90%, the result is virtually indistinguishable from the original person.

In contrast, general open-source models usually achieve a similarity of about 60% to 70%.

Face Swapping Challenges

Typically, the training samples for AI face-swapping are primarily front-facing portraits of individuals looking directly at the camera.

However, users' face-swapping requests cover many complex scenarios for which there is not enough training data.

This leads to issues such as low similarity in the swapped images, or even distortions and deformations of the face.

In videos, the swap can also fail on some frames, briefly revealing the original face.

Here are two common complex scenarios:

(1) Large Angles

One of the biggest challenges is accurately aligning faces in different poses or at different angles, such as looking down, looking up, or turned to the side.

(2) Occlusion

Handling occlusions (e.g., hair covering part of the face, glasses, or hands) is particularly challenging.

DeepSwap overcomes the challenges

To handle the variation found in complex scenarios, DeepSwap selected training data covering different genders, ages, lighting conditions, poses, expressions, and shooting angles.

For each complex scenario, such as large angles and occlusions, at least several hundred thousand samples were used for training, so that the face swap adapts to a wide variety of scenarios:

(1) Large Angles

Looking Down:

Side Faces:

(2) Occlusions

Others:

DeepSwap:

Conclusion

Successful AI face swapping involves many steps: detecting the face and its landmarks, aligning the faces, extracting and encoding key facial features from both the source and the target, generating the swapped face, and finally blending and refining it.

Cosine similarity is used to measure how well a face swap preserves the source identity. In my tests, DeepSwap, which uses a proprietary model, outperformed the many open-source models I compared it with.

Moreover, DeepSwap’s performance in overcoming complex scenarios is also the most stable.